Here is a very brief update on what I've been doing lately, in no particular order:

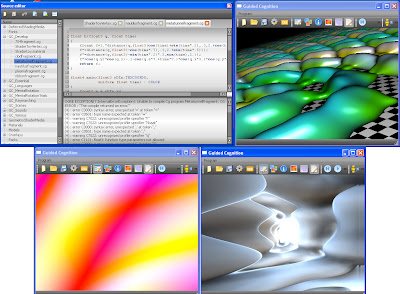

- Started working on a deferred particle renderer node, but had to pause the work after running into unexplained GLSL shader behavior: a vertex texture fetch only seems able to access one or two texels in one corner of the texture. I am not sure whether this is caused by a bug in my code, Ogre 3D, OpenGL/GLSL, or Parallels, which I am using to host a virtual WinXP development environment on my MacBook Pro. Posting a question on the Ogre 3D forum did not yield any response, and I currently do not have a working Ogre 3D example that uses GLSL and vertex texture fetching. I think my next step will be to port all my GLSL shaders to HLSL or Cg and see if that makes a difference.

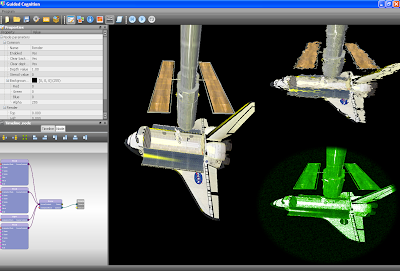

- Extended the tool and framework with support for stereoscopic rendering (currently only with Direct3D, since the OpenGL drivers do not seem to support multiple monitors on WinXP):

  - Dual output, for use with head-mounted displays or two projectors with polarized filters,

  - Anaglyph, for use with a single screen and red/cyan glasses,

  - Autostereoscopic, for use with 3D TVs that support horizontal, vertical or checkerboard interlaced signals.
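To give an idea of what the anaglyph and interlaced modes boil down to per pixel, here is a minimal CPU-side sketch in C++. It is not the code from the tool (which does this on the GPU through Ogre); the `Rgb` type, the `Interlace` enum and all function names are made up for illustration. It also assumes that "horizontal" means alternating rows and that the left eye gets the even rows, columns or cells, which a particular display may well expect the other way around.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pixel type; not taken from the tool or from Ogre.
struct Rgb {
    std::uint8_t r, g, b;
};

// Hypothetical names for the interlaced layouts listed above.
enum class Interlace { Horizontal, Vertical, Checkerboard };

// Red/cyan anaglyph: red channel from the left eye,
// green and blue channels from the right eye.
Rgb anaglyph(const Rgb& left, const Rgb& right) {
    return Rgb{left.r, right.g, right.b};
}

// Returns true when pixel (x, y) should show the left-eye image.
// Assumption: the left eye owns the even rows/columns/cells.
bool usesLeftEye(Interlace mode, std::size_t x, std::size_t y) {
    switch (mode) {
        case Interlace::Horizontal:   return y % 2 == 0;        // alternate rows
        case Interlace::Vertical:     return x % 2 == 0;        // alternate columns
        case Interlace::Checkerboard: return (x + y) % 2 == 0;  // alternate cells
    }
    return true;
}

// Combines two equally sized eye images (row-major, width * height pixels)
// into a single interlaced frame.
std::vector<Rgb> interlace(const std::vector<Rgb>& left,
                           const std::vector<Rgb>& right,
                           std::size_t width, std::size_t height,
                           Interlace mode) {
    assert(left.size() == width * height);
    assert(right.size() == width * height);
    std::vector<Rgb> out(width * height);
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            const std::size_t i = y * width + x;
            out[i] = usesLeftEye(mode, x, y) ? left[i] : right[i];
        }
    }
    return out;
}
```

In a shader the same decision would typically be made per fragment from the screen coordinates, with the two eye views bound as textures.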

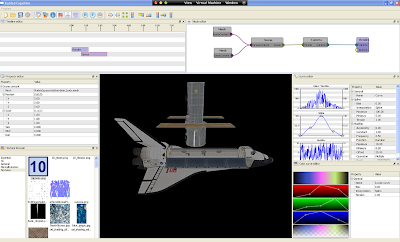

- Worked on a proof-of-concept demo for my academic advisors. Basically this entailed making sure that both a simple landscape 'flyby' scene and my Delft University of Technology thesis project work.

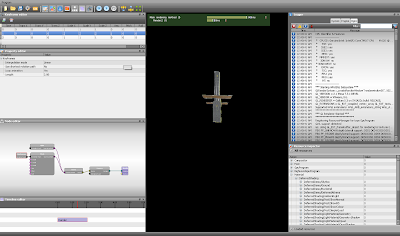

- Added exception handling support to the codebase, which prevents the tool from crashing without any sort of warning and/or log message. For instance, if you load a previously created visualization from file, and an error occurs during loading, an error message will be shown and the affected modules and nodes will not be loaded.

- Switched to Ogre 1.8. Using the CMake build system now allows me to generate builds for Windows or Mac, using Ogre 1.7 or 1.8, and for a variety of compilers and IDEs such as Microsoft Visual C++, Code::Blocks, Eclipse, GCC, Xcode and so on. I still want to switch to Xcode and a Mac-based development environment, but my familiarity with MSVC's debugger is what keeps me coming back to Windows. For now.

- Last but not least, I did some testing, testing and then some more testing. The shader editor seems to work OK now, including on-the-fly compiling and reloading of shaders. Yay :-D
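The exception handling mentioned above (skip whatever fails to load, report it, keep going) can be pictured roughly like this. This is only a sketch under my own assumptions, not the actual loader: `NodeSpec`, `LoadReport` and `loadNodes` are hypothetical names, and each node's loading step is stood in for by a callable that throws on failure.

```cpp
#include <exception>
#include <functional>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical description of one node in a saved visualization.
struct NodeSpec {
    std::string name;
    std::function<void()> load;  // expected to throw on failure
};

// Hypothetical summary of a load attempt.
struct LoadReport {
    std::vector<std::string> loaded;  // names of nodes that loaded fine
    std::vector<std::string> errors;  // one message per node that failed
};

// Tries to load every node. A failing node is skipped and recorded
// instead of taking the whole application down.
LoadReport loadNodes(const std::vector<NodeSpec>& specs) {
    LoadReport report;
    for (const NodeSpec& spec : specs) {
        try {
            spec.load();
            report.loaded.push_back(spec.name);
        } catch (const std::exception& e) {
            report.errors.push_back(spec.name + ": " + e.what());
        } catch (...) {
            // Anything not derived from std::exception still gets logged.
            report.errors.push_back(spec.name + ": unknown error");
        }
    }
    return report;
}
```

The collected `errors` would then be shown in a message box and written to the log, while the nodes in `loaded` stay usable.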

Since I will be using this tool in my PhD research experiment(s), my todo list is partially dictated by what is needed to support that. But that should not amount to having to develop more than a node or two. In the meantime, I will be busy with testing the tool and framework, and working on the deferred particle renderer. I will get those @#$@# particles to work; I am not ready to give up just yet ;-)

... to be continued ...