I wanted to devote one post to an overview of how the 3d editor I am working on has evolved. When you are engaged in your passion, it is easy to lose track of time: while writing this post, it struck me that several years have passed since I started working on this editor. Roughly, the versions can be distinguished by function as follows:

- Version 1: Using C++ for direct control of the DirectX API, and Microsoft Foundation Classes to create a GUI

- Version 2: Using C# to build an engine on top of managed Ogre as a rendering component, and using WinForms to create a GUI

- Version 3: Using C++ to build an engine on top of Ogre as a rendering component, and glue that to a WinForms based GUI programmed in C#

- Version 4 (in development): Extending the C++ engine to support node-based compositing (in addition to timeline-based compositing), and using Qt to create a GUI. This version will also be platform independent.

The remainder of this post provides some basic information about each version, accompanied by some screenshots.

Version 1: initial experiments and the beginnings of a game editor

It all started with some simple experiments in C++ (using Microsoft Visual Studio 6) and the DirectX API (version 7 or 8 at the time, I think). I made a few simple programs that loaded and displayed a 3d scene, experimented with simple generation of landscape meshes, and so on. When that went well, I moved on to more elaborate routines, all of which were basically aimed at speeding up the rendering of a large number of triangles:

- Octree-based spatial subdivision, to determine which parts of a scene are on-screen and which are off-screen

- Occlusion culling, to avoid rendering triangles that are hidden behind triangles closer to the camera

- Batching: collecting rendering operations into batches and submitting each batch in one go, to reduce per-draw-call overhead.

- Rendering meshes with an adaptive level of detail: the further away a mesh is from the camera, the fewer triangles are used to display it.
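The octree idea from the first item can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the editor's actual code: objects are represented by axis-aligned bounding boxes, each object is stored in the deepest cell that fully contains it, and the view frustum is approximated by a query box (a real implementation would test against the six frustum planes). The names `AABB`, `Octree`, `insert` and `query` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <memory>
#include <vector>

// Axis-aligned bounding box (hypothetical helper type).
struct AABB {
    float min[3];
    float max[3];

    bool intersects(const AABB& o) const {
        for (int i = 0; i < 3; ++i)
            if (max[i] < o.min[i] || min[i] > o.max[i]) return false;
        return true;
    }
    bool contains(const AABB& o) const {
        for (int i = 0; i < 3; ++i)
            if (o.min[i] < min[i] || o.max[i] > max[i]) return false;
        return true;
    }
};

// Octree node: an object lives in the deepest node whose box fully contains it.
class Octree {
public:
    Octree(const AABB& bounds, int maxDepth) : bounds_(bounds), maxDepth_(maxDepth) {
        assert(maxDepth >= 0);
    }

    void insert(int id, const AABB& box) {
        if (maxDepth_ > 0) {
            if (children_[0] == nullptr) subdivide();
            for (auto& child : children_) {
                if (child->bounds_.contains(box)) {
                    child->insert(id, box);
                    return;
                }
            }
        }
        // Object straddles a split plane (or we are at max depth): keep it here.
        items_.push_back({id, box});
    }

    // Collects ids of objects overlapping the query region (a stand-in for the view frustum).
    void query(const AABB& region, std::vector<int>& out) const {
        if (!bounds_.intersects(region)) return;  // whole subtree off-screen: skip it
        for (const auto& item : items_)
            if (item.box.intersects(region)) out.push_back(item.id);
        if (children_[0])
            for (const auto& child : children_) child->query(region, out);
    }

private:
    struct Item { int id; AABB box; };

    // Splits this node's box into 8 equal octants.
    void subdivide() {
        float mid[3];
        for (int i = 0; i < 3; ++i) mid[i] = 0.5f * (bounds_.min[i] + bounds_.max[i]);
        for (int c = 0; c < 8; ++c) {
            AABB b;
            for (int i = 0; i < 3; ++i) {
                bool upper = (c >> i) & 1;  // bit i selects the upper half along axis i
                b.min[i] = upper ? mid[i] : bounds_.min[i];
                b.max[i] = upper ? bounds_.max[i] : mid[i];
            }
            children_[c] = std::make_unique<Octree>(b, maxDepth_ - 1);
        }
    }

    AABB bounds_;
    int maxDepth_;
    std::vector<Item> items_;
    std::array<std::unique_ptr<Octree>, 8> children_;
};
```

Because `query` returns as soon as a cell's box misses the query region, an entire off-screen branch of the scene is rejected with a single box test, which is what makes the subdivision pay off for large scenes.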

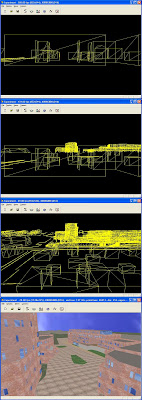

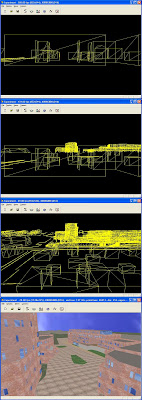

The screenshot below shows the octree-based spatial subdivision at work:

The screenshots below show the occlusion culling routine at work. When the view of the city is obscured by a wall (top image), the geometry behind the wall is not rendered. As the camera rises above the wall (center images), more octree cells containing geometry become visible. The bottom screenshot is included only as a reference, showing what the full city model looks like.
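The behaviour in these screenshots can be approximated with a coarse screen-space depth buffer of occluders. The sketch below is an illustrative assumption, not the routine used in the editor: projecting walls and octree cells to screen-space tile rectangles is assumed to happen elsewhere, occluders are assumed to fully cover the tiles they are written into, and `ScreenRect`, `OcclusionBuffer` and `visibleCells` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Screen-space footprint of an octree cell or occluder, in tiles of a coarse buffer.
// Projecting 3d bounds to this form is assumed to happen elsewhere.
struct ScreenRect {
    int x0, y0, x1, y1;  // inclusive tile range
    float nearDepth;     // closest distance to the camera
    float farDepth;      // farthest distance to the camera
};

class OcclusionBuffer {
public:
    OcclusionBuffer(int width, int height)
        : width_(width), height_(height),
          depth_(static_cast<std::size_t>(width) * height,
                 std::numeric_limits<float>::infinity()) {}

    // Writes a solid occluder (e.g. a wall). Storing its far depth is conservative:
    // anything farther away than that is certainly behind it in these tiles.
    void addOccluder(const ScreenRect& r) {
        assert(inBounds(r));
        for (int y = r.y0; y <= r.y1; ++y)
            for (int x = r.x0; x <= r.x1; ++x) {
                float& d = depth_[index(x, y)];
                d = std::min(d, r.farDepth);
            }
    }

    // Hidden only if every covered tile already holds an occluder closer than r.
    bool isOccluded(const ScreenRect& r) const {
        assert(inBounds(r));
        for (int y = r.y0; y <= r.y1; ++y)
            for (int x = r.x0; x <= r.x1; ++x)
                if (depth_[index(x, y)] >= r.nearDepth) return false;
        return true;
    }

private:
    bool inBounds(const ScreenRect& r) const {
        return r.x0 >= 0 && r.y0 >= 0 && r.x1 < width_ && r.y1 < height_ &&
               r.x0 <= r.x1 && r.y0 <= r.y1;
    }
    std::size_t index(int x, int y) const {
        return static_cast<std::size_t>(y) * width_ + x;
    }

    int width_, height_;
    std::vector<float> depth_;
};

// Returns the indices of the cells that are not hidden behind any of the occluders.
std::vector<int> visibleCells(OcclusionBuffer& buffer,
                              const std::vector<ScreenRect>& occluders,
                              const std::vector<ScreenRect>& cells) {
    for (const ScreenRect& o : occluders) buffer.addOccluder(o);
    std::vector<int> visible;
    for (std::size_t i = 0; i < cells.size(); ++i)
        if (!buffer.isOccluded(cells[i])) visible.push_back(static_cast<int>(i));
    return visible;
}
```

In the wall scenario, a cell whose footprint lies entirely behind the wall fails the test in every tile and is culled; once the camera rises and part of the cell's footprint extends past the top of the wall, at least one tile has no closer occluder and the cell is drawn again.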

I also built a graphical user interface around these features using the Microsoft Foundation Classes (MFC). Below are a few screenshots of this version of the editor...

At this point I looked back at what I had accomplished and realized a few things:

- My editor was heading more toward a game (level) editor than toward a 3d visualization editor. I did not like this direction. At all.

- Even though programming low-level routines for rendering large numbers of triangles was a nice challenge, I did not want to spend my time "reinventing the wheel", as there are plenty of existing solutions. I started considering dropping in an existing open-source 3d engine to handle all the rendering, so that I could focus on creating an editor that supports the workflow I have in mind.

- Developing graphical user interface components in MFC took up a lot more time than necessary. I started to consider switching to environments that make it easier to develop custom user interface components.

Ultimately this resulted in my first complete rewrite of the editor...

Version 2: The limitations of C# and .NET

After some deliberation I made a few decisions:

- Use an existing open-source rendering engine. I settled on Ogre3d because of its extensive features, documentation and examples.

- Use C# and .NET for the graphical user interface, because that was what I had experience in.

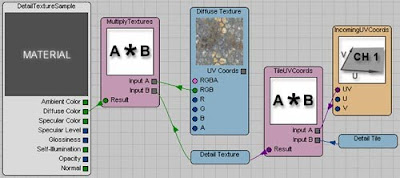

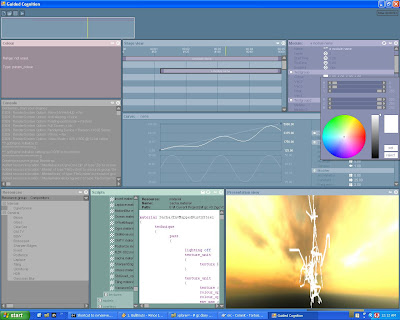

Here are a few screenshots showing the graphical user interface. Notice that most of the panels (as discussed in the previous blog post) are implemented: editors for the timeline, scripts, parameters, splines and so on.

The decision to use C# had its good and bad sides. The good side is that the ideas I developed for structuring the engine and the GUI are more or less completely intact in the current version of the editor, and that it did not take much time to implement all of them. The bad side is that I built everything in C#, which meant using a wrapper for Ogre. Let's just say that at the time I was not thinking straight and did not foresee the limiting consequences of that decision. The most important of these were:

- Because I used a wrapper for Ogre, it always took a while before new Ogre releases were supported by the wrapper.

- The wrapper only wraps Ogre, not all of the additional libraries and plugins you may want to use (e.g. OIS, for reading keyboard, mouse and joystick input from your code).

- If I wanted to make changes to Ogre, I had to make changes to the wrapper code as well.

So, after a few weeks of working on this version, I labeled it "a can of worms" and started considering yet another rewrite...

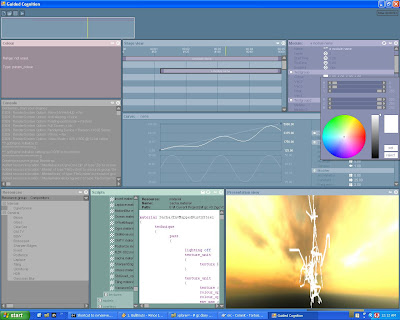

Version 3: trying to mix things that really should not be mixed

For some reason I came up with the idea that I could get the "best of both worlds" by using C# for the GUI and C++ for the engine, gluing the two together with a tool called the "Simplified Wrapper and Interface Generator" (SWIG). This part of the process did not take me long. Finally I had direct access to all the goodies (plugins, examples, etc.) made available by the Ogre community, while creating custom GUI components was quite easy in .NET. At least, that was what I thought... but more on that after the mandatory screenshots below.

While working on Version 2 and Version 3 of this editor, I had enrolled in a graduate program (M.Sc. specializing in Human Computer Interaction) at Delft University of Technology in the Netherlands. I was lucky enough to be able to use this editor as the basis for my thesis on 'patient motivation in virtual reality based neurocognitive rehabilitation'. This proved to be an excellent case study for testing my editor and engine. To create a game-based rehabilitation exercise, I had to finish or add a few bits and pieces to the engine:

- Saving and loading of engine states,

- Sound (using the FMOD library),

- Saving and loading of XML files, for data or configuration input and output,

- Keyboard and mouse input,

- Wii Remote input, used not only as a game controller but also for head tracking,

- General robustness of the editor and engine, so that it runs reliably and stably on different computers.

The results of this work can be found here [tudelft.nl] (this includes some screenshots of the final game, and a video that shows how patients interact with the system using pointing and head-tracking mechanisms).

However, I realized that there are some major downsides to having the editor/engine code split across C# and C++. The most important one is that not having direct access to engine functionality from the editor is quite limiting: everything has to go through proxy wrapper classes (generated by SWIG). There were several options to work around this, including generating wrapper code for Ogre myself, re-integrating MOgre, or accepting the current limitations. A discussion with the author of Yaose, a script editor for Ogre, pointed me in the direction of the Qt framework for creating GUIs. In my opinion this library is more flexible than C# and WinForms for creating GUIs, it allows direct access to the rendering engine without the need for wrapper code, and as a bonus, it runs on multiple platforms. Since Ogre also runs on multiple platforms, this means that, in theory, my editor could be configured to run on many different platforms (Windows, OS X, Linux, iPhone, ...).

Version 4: Doing It Right(er)

So recently I started yet another rewrite. As with Version 3, it is partly a rewrite and partly an extension of the codebase. This time I need to rewrite the GUI, but there is plenty of Qt example code available to get me started (a few days of work on the Qt version got me to the same point as a few ~weeks~ of work on C#/WinForms). Furthermore, the engine codebase needs to be extended to support node-based compositing alongside timeline-based compositing. I am also considering structuring the codebase so that the engine is separated from the underlying graphics rendering framework, so that you could use OpenGL or DirectX directly instead of going through Ogre as middleware. This would make it easier to use the editor for demoscene productions. I expect version 4 of the editor to be operational and ready to use in the summer of 2011.
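Separating the engine from the rendering framework typically comes down to letting the engine talk only to an abstract interface. The following is a minimal sketch under that assumption; `RenderBackend`, `LoggingBackend` and `Engine` are hypothetical names, and the logging backend merely stands in for real Ogre, OpenGL or DirectX implementations.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical backend interface: the engine never calls Ogre, OpenGL or DirectX directly.
class RenderBackend {
public:
    virtual ~RenderBackend() = default;
    virtual void beginFrame() = 0;
    virtual void drawMesh(int meshId) = 0;
    virtual void endFrame() = 0;
};

// Stand-in backend that only records the calls it receives; an Ogre-based or a
// raw OpenGL/DirectX backend would implement the same three functions.
class LoggingBackend : public RenderBackend {
public:
    void beginFrame() override { log.push_back("begin"); }
    void drawMesh(int meshId) override { log.push_back("draw " + std::to_string(meshId)); }
    void endFrame() override { log.push_back("end"); }

    std::vector<std::string> log;
};

// Engine code depends only on the abstract interface it owns.
class Engine {
public:
    explicit Engine(std::unique_ptr<RenderBackend> backend) : backend_(std::move(backend)) {
        assert(backend_ != nullptr);
    }

    void renderFrame(const std::vector<int>& visibleMeshes) {
        backend_->beginFrame();
        for (int id : visibleMeshes) backend_->drawMesh(id);
        backend_->endFrame();
    }

private:
    std::unique_ptr<RenderBackend> backend_;
};
```

With this split, targeting a raw OpenGL or DirectX backend for a demoscene production would mean adding another `RenderBackend` subclass, without touching the engine code that calls it.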

However, I don't have any useful screenshots to show yet... And it looks like, due to my university schedule, I will not be able to spend much time (if any) on programming in the next few weeks. So the next major update will have to wait until the end of the year...

... but at least, progress is still being made!

:-D