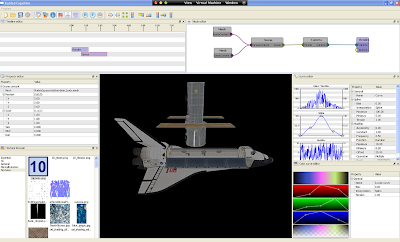

- Implemented some basic nodes: Camera, Scene, Light, Mesh, Plane, ParticleSystem, RenderTarget

- Render to texture: it is now possible to construct a scene and render its output to a texture (= a RenderTarget node). This texture can then be used as input to another render node.

- Testing, testing, testing and more testing. I've been randomly placing and connecting nodes, saving and then reloading them, disconnecting them, reconnecting them and so on, to check whether the program is stable and free of memory and/or resource leaks. This is a slow, ongoing process, but it's getting there :-)
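To make the render-to-texture chaining a bit more concrete, here is a minimal Python sketch of how connected nodes could be ordered so that a RenderTarget is rendered before the node that uses its texture. This is not the tool's actual code: the `Node` class, the slot names and `evaluation_order` are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical node: a name plus named input slots pointing at other nodes."""
    name: str
    inputs: dict = field(default_factory=dict)

    def connect(self, slot, source):
        # Plug `source` into the input slot called `slot`.
        self.inputs[slot] = source

    def disconnect(self, slot):
        # Removing a slot that is not connected is a no-op.
        self.inputs.pop(slot, None)


def evaluation_order(output):
    """Return node names with every input listed before the node that uses it."""
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for source in node.inputs.values():
            visit(source)
        order.append(node.name)

    visit(output)
    return order


# Scene A is rendered into a texture, which is then shown on a plane in scene B.
scene_a = Node("SceneA")
target = Node("RenderTarget")
target.connect("scene", scene_a)
plane = Node("Plane")
plane.connect("texture", target)
scene_b = Node("SceneB")
scene_b.connect("child", plane)
print(evaluation_order(scene_b))
```

The same structure also lends itself to the connect/disconnect/reconnect stress testing described above, since each operation is just a change to a node's input slots.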

The following nodes and/or functionality still have to be implemented (listed in approximate order of importance):

- Scene import: import a 3D scene from one or more 3D tools (e.g. 3ds Max, Maya, Blender). Perhaps an intermediate format exists, such as dotscene, that each of these 3D tools can handle?

- Animated Mesh node: This node loads a mesh and its accompanying skeletal animation structure. Through its parameters you can then control which animation is shown on screen (e.g. walking, running and so on).

- AnimationTrack node: This node can be connected to any scene object (light/mesh/camera/particlesystem) and animate its position, orientation or scale. The animation can be edited in the tool itself, which will display a list of keyframes and their associated values.

- Neural controller node: This node evolves a neural network to control any animated mesh. It is heavily inspired by Karl Sims' work on Evolved Virtual Creatures, and by the more recent 4k demo from Archee called Darwinism. The main difference is that these prior works use genetic algorithms to evolve the controllers, while I intend to use artificial neural networks, more specifically Ken Stanley's NeuroEvolution of Augmenting Topologies (NEAT).

- PostProcessing node: Although Ogre3D achieves postprocessing effects using a compositor framework, I am contemplating whether it would be better/easier (for the kind of virtual environments that I am targeting with this tool) to have a node that is dedicated to postprocessing. It would take a texture (from a RenderTargetNode) as input and apply a postprocessing effect (e.g. blur, bloom, ASCII and so on) to it. The effect's shader parameters would be exposed through the node GUI as animatable input parameters.
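As a rough idea of what the AnimationTrack node from the list above would have to do at playback time, here is a small Python sketch that samples a list of keyframes by linear interpolation. The `sample_track` function and the `(time, value)` tuple layout are assumptions of mine for illustration, not the planned implementation. Linear blending like this only suits position and scale; orientation would normally be interpolated with quaternion slerp instead.

```python
from bisect import bisect_right


def sample_track(keyframes, t):
    """Sample a track at time t.

    `keyframes` is a non-empty list of (time, value) pairs sorted by time,
    where each value is a tuple of floats (e.g. an x, y, z position).
    Times outside the track are clamped to the first or last keyframe.
    """
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    # Index of the first keyframe strictly after t; i - 1 is the one before.
    i = bisect_right(times, t)
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)
    return tuple(a + (b - a) * alpha for a, b in zip(v0, v1))


# A camera position that moves from the origin to (10, 0, 4) over two seconds.
position_track = [(0.0, (0.0, 0.0, 0.0)), (2.0, (10.0, 0.0, 4.0))]
print(sample_track(position_track, 1.0))
```

The keyframe list shown in the editor would then map directly onto the `(time, value)` pairs.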

Unfortunately, I am also getting busier with my graduate studies, which means that I will have less time to spend on this project. However, it looks like I will have the entire summer free to work on it, which should allow me to implement everything listed above.

... to be continued ...