Less than a year ago I started a major rewrite of the program. My main motivation was to switch from .NET to C++ as the main programming language, and from WinForms to Qt for creating the graphical user interface. Another major goal was to be able to create visual content using both node-based and timeline-based compositing techniques. This weekend I implemented one of the last major features this revamped framework required: reusable nodes. The idea is that once you have created visual content in one place in the program, you can reuse that content in other places.

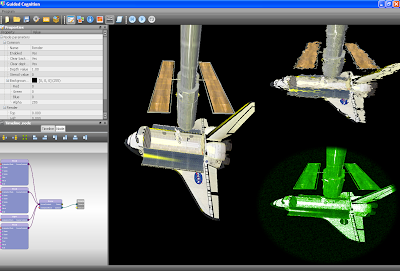

The screenshot above shows a simple example: a single 3D scene drawn in three different ways, without any effects (left), with a 'glass' postprocessing effect (top right), and with a 'nightvision' postprocessing effect (bottom right). The bottom left shows how the various nodes are interconnected to construct the 3D scene. The four leftmost nodes are the scene elements (space shuttle, Hubble telescope, astronaut, and a light source), which are all connected to a scene node near the center of the image. This scene node connects to a render node, which draws its contents to the screen.

The screenshot also shows that each of the postprocessing nodes references the same scene node: the original scene node's content is the input for both effects ('glass' and 'nightvision') drawn on the screen. Any change made to, e.g., the space shuttle mesh node is immediately reflected in all renderings of the scene, since they all share the same scene node. The framework allows any type of node (e.g. a RenderTarget node) to be shared this way, even though sharing some node types (e.g. a Light node or a Mesh node) may not make much sense.
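To make the sharing idea concrete, here is a minimal C++ sketch. It assumes a hypothetical node interface in which every node's output is reduced to a string (a real renderer would produce geometry or textures instead); `Node`, `MeshNode`, `SceneNode` and `EffectNode` are illustrative names, not the framework's actual classes. Sharing is modelled with `std::shared_ptr`: both effect nodes hold a pointer to the same scene node rather than a copy of it.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical node interface: each node describes its output as a string.
struct Node {
    virtual ~Node() = default;
    virtual std::string evaluate() const = 0;
};

// Leaf content node, e.g. a mesh.
struct MeshNode : Node {
    std::string name;
    explicit MeshNode(std::string n) : name(std::move(n)) {}
    std::string evaluate() const override { return name; }
};

// Scene node: holds shared references to its inputs, not copies.
struct SceneNode : Node {
    std::vector<std::shared_ptr<Node>> inputs;
    std::string evaluate() const override {
        std::string out = "scene(";
        for (std::size_t i = 0; i < inputs.size(); ++i) {
            if (i > 0) out += ",";
            out += inputs[i]->evaluate();
        }
        return out + ")";
    }
};

// Postprocessing node: applies a named effect to another node's output.
// Several effect nodes may hold the same input pointer.
struct EffectNode : Node {
    std::string effect;
    std::shared_ptr<const Node> input;
    EffectNode(std::string e, std::shared_ptr<const Node> in)
        : effect(std::move(e)), input(std::move(in)) {
        assert(input && "an effect node needs an input");
    }
    std::string evaluate() const override {
        return effect + "[" + input->evaluate() + "]";
    }
};
```

With a 'glass' and a 'nightvision' `EffectNode` built from the same `SceneNode`, renaming a `MeshNode` once changes the result of `evaluate()` on both effects, because neither effect owns a private copy of the scene. Reference counting also keeps a shared scene alive for as long as any consumer points at it, which is one reasonable ownership choice for a node graph, though not the only one.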

Even though the core functionality for sharing nodes works, including saving and loading, there is still much work to do, mainly with regard to testing: creating scenes, adding meshes, animating meshes, then reusing these scenes multiple times in postprocessing steps. I am sure this will involve a lot of program crashes and bug fixes. However, I am fairly confident that the meat of the code is good now; I do not expect to make many structural changes while preparing the framework for actual use in either my research or a demoscene production :-)
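Saving and loading is where sharing gets subtle: a naive recursive save would write a shared scene node once per consumer, and loading would then produce independent copies. One common approach is to give each distinct node an ID, write it exactly once (inputs before the node itself), and store references as IDs. The sketch below is a minimal illustration under stated assumptions: `GraphNode`, `saveNode` and `loadGraph` are hypothetical names, the line-based text format is invented for this example, the graph is assumed to be acyclic, and node type names are assumed to contain no whitespace.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <map>
#include <memory>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical minimal node: a type name plus references to input nodes.
struct GraphNode {
    std::string type;
    std::vector<std::shared_ptr<GraphNode>> inputs;
};

// Save depth-first, inputs before the node itself. Each distinct node gets an
// ID on first visit, so a shared node is written once and reused by ID.
// Line format: "<id> <type> <inputCount> <inputId>..." (assumed, not a real format).
int saveNode(const std::shared_ptr<GraphNode>& node,
             std::map<const GraphNode*, int>& ids, std::ostream& out) {
    auto it = ids.find(node.get());
    if (it != ids.end()) return it->second;  // already written: reuse its ID
    std::vector<int> inputIds;
    for (const auto& in : node->inputs) inputIds.push_back(saveNode(in, ids, out));
    int id = static_cast<int>(ids.size());  // next unused ID
    ids[node.get()] = id;
    out << id << ' ' << node->type << ' ' << inputIds.size();
    for (int in : inputIds) out << ' ' << in;
    out << '\n';
    return id;
}

// Load: lines appear in dependency order, so every input ID refers to a node
// that was already recreated. at() rejects IDs that are not loaded yet.
std::vector<std::shared_ptr<GraphNode>> loadGraph(std::istream& in) {
    std::vector<std::shared_ptr<GraphNode>> nodes;
    int id = 0;
    std::size_t count = 0;
    std::string type;
    while (in >> id >> type >> count) {
        assert(id == static_cast<int>(nodes.size()) && "IDs must be sequential");
        auto node = std::make_shared<GraphNode>();
        node->type = type;
        for (std::size_t i = 0; i < count; ++i) {
            int ref = 0;
            in >> ref;
            node->inputs.push_back(nodes.at(static_cast<std::size_t>(ref)));
        }
        nodes.push_back(node);
    }
    return nodes;
}
```

Saving a graph in which a glass node and a nightvision node share one scene node writes that scene node a single time; after loading, both effect nodes point at the same recreated object again, so edits to it still reach both effects.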

Other issues that I've been working on are:

- GUI: added syntax highlighting for compositor and overlay scripts

- Engine: moved away from Cg in favor of GLSL. I simply ran into too many related issues: not just bugs in the Cg compiler, but also limitations of running a Windows development environment in a virtual machine on a Mac.

- Switched from VMware to Parallels for running Windows in a virtual machine. VMware imposed severe limitations on the graphics hardware features made available in the virtualized environment; in practice I could not use any deferred rendering until I switched to Parallels.

As usual I'll end this post with a small to-do list, in no particular order:

- Testing, testing and more testing

- Add support for a GNU Rocket node. However, since my GUI already supports editing keyframe animation (position/orientation/scaling of a mesh or camera), I may generalize that functionality so that any floating point value can be keyframed. I think that with relatively few lines of code I can create the same functionality as GNU Rocket. What I end up doing depends on which of the two options is more effective (resulting functionality vs. the effort required to implement it).

- I still dream of deferred particle renderers... now that my development environment supports them, I can actually start working on one :-)

- More testing... Will it ever end?
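The keyframe generalization mentioned in the to-do list could start from something quite small. Below is a minimal sketch, assuming a hypothetical `FloatTrack` class that stores sorted keys for a single float value and evaluates it at any time. This is not GNU Rocket's API, and only step and linear interpolation are shown; smoother curves would slot in as further `Interp` modes.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical interpolation modes for a generic float track.
enum class Interp { Step, Linear };

struct Key {
    float time;
    float value;
    Interp interp;  // how to move from this key to the next one
};

class FloatTrack {
public:
    // Insert a key (or overwrite one at the same time), keeping keys sorted.
    void setKey(float time, float value, Interp interp = Interp::Linear) {
        auto it = std::lower_bound(keys_.begin(), keys_.end(), time,
            [](const Key& k, float t) { return k.time < t; });
        if (it != keys_.end() && it->time == time) {
            *it = Key{time, value, interp};
            return;
        }
        keys_.insert(it, Key{time, value, interp});
    }

    // Evaluate at an arbitrary time; clamps to the first/last key outside the keyed range.
    float valueAt(float time) const {
        assert(!keys_.empty() && "track has no keys");
        if (time <= keys_.front().time) return keys_.front().value;
        if (time >= keys_.back().time) return keys_.back().value;
        // First key strictly after `time`; its predecessor starts the segment.
        auto next = std::upper_bound(keys_.begin(), keys_.end(), time,
            [](float t, const Key& k) { return t < k.time; });
        const Key& a = *(next - 1);
        const Key& b = *next;
        if (a.interp == Interp::Step) return a.value;
        float u = (time - a.time) / (b.time - a.time);
        return a.value + u * (b.value - a.value);
    }

private:
    std::vector<Key> keys_;
};
```

Any animatable parameter (a camera coordinate, an effect strength, a shader uniform) could then be bound to its own `FloatTrack` and sampled once per frame, which is roughly the kind of functionality the GNU Rocket comparison is about.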